How Udemy Generates More Revenue with Smart A/B Testing

Like most “growth hacks,” A/B testing has seen its value lost in today’s rush for game-changing results.

As any experienced marketer or entrepreneur knows, there are no silver bullets when it comes to scaling a business. A/B testing, like every other tactic, has its place in the marketing toolbox. Used correctly, it can drive incremental gains. Many incremental gains lead to traction, which can lead to real growth.

A/B testing is as important for the mindset as it is for the data. A commitment to A/B testing signals that you’re running a data-driven business. Going with your gut has its place too, usually alongside a carefully crafted control.

So what constitutes a meaningful A/B test? First, let’s take a quick look at the scientific method, which lays a good foundation for running tests that produce valid results.

Here is a brief but useful definition of the scientific method from ScienceBuddies.org:

The scientific method is a process for experimentation that is used to explore observations and answer questions. Scientists use the scientific method to search for cause and effect relationships in nature. In other words, they design an experiment so that changes to one item cause something else to vary in a predictable way.

Let’s quickly see how that applies to marketing, specifically email.

- Ask a question: Why don’t more people click our emails?

- Do background research: What are best practices for email copy?

- Construct a hypothesis: More people will click our emails if we integrate behavioral data.

- Test with an experiment: Test existing copy (control) against new, dynamic copy (variable).

- Analyze data and draw conclusions: Behavioral data added context to emails, therefore driving more clicks.

- Implement learnings: Include behavioral data in email campaigns, move on to next test.
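The “test with an experiment” step depends on splitting recipients between control and variable without bias. One common technique is hash-based bucketing: hash the user ID together with an experiment name so each user always lands in the same arm. This is a minimal sketch, assuming string user IDs and a two-arm test; the experiment name and user IDs are hypothetical, and this is not necessarily how any particular email platform assigns users.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("control", "variable")) -> str:
    """Deterministically assign a user to one arm of an experiment.

    Hashing the experiment name together with the user ID means the same user
    always gets the same arm for a given test, while separate experiments
    split users independently of each other.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Treat the first 8 hex characters as an integer and map it onto an arm.
    bucket = int(digest[:8], 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Hypothetical experiment name and user IDs.
    for uid in ["u1001", "u1002", "u1003", "u1004"]:
        print(uid, assign_variant(uid, "email-behavioral-copy"))
```

Because assignment is a pure function of the IDs, a user who triggers the same automated email twice still sees the same version, which keeps the comparison clean.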

Done correctly, A/B testing produces compounding improvement. The key is to implement a series of automated onboarding, lifecycle and retention emails and then test them rigorously, rather than A/B testing the subject line of every promotional email you send.

Testing one-off emails produces one-off learnings. If you were to send that same campaign again, you could improve on it. But since you aren’t, you can’t apply your learnings to the next email. Testing automated campaigns, however, means you can constantly improve the results. A steady flow of new users provides continual feedback and, if you are committed to the data, you can regularly update copy, images, buttons, triggers and timing to send the best possible emails.

It’s also important to consider that testing small variations will likely produce small differences in results. As Noah Kagan wrote on the Visual Website Optimizer blog, only one in eight A/B tests drives significant change. Unless you’re Amazon or TripAdvisor, your data pool probably isn’t large enough for decimal points to matter. If Email A has a 15.2% click rate and Email B has a 14.8% click rate, did Email A really win? You can’t be sure, and you likely didn’t put enough creative effort into a truly varied test.
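To put numbers on the 15.2% vs. 14.8% question, here is a standard two-proportion z-test, plus the usual normal-approximation sample-size formula at 5% significance and 80% power. Both are swapped in as common textbook choices; the article doesn’t say how Udemy evaluates its tests. The 5,000 recipients per email are an assumption for illustration.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(clicks_a: int, n_a: int, clicks_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two click rates."""
    p_a = clicks_a / n_a
    p_b = clicks_b / n_b
    # Pooled click rate under the null hypothesis that both emails perform the same.
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def sample_size_per_arm(p_a: float, p_b: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate recipients needed in EACH arm to reliably detect p_a vs. p_b."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_a * (1 - p_a) + p_b * (1 - p_b)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p_a - p_b) ** 2)


if __name__ == "__main__":
    # Hypothetical: 5,000 recipients per email, 15.2% vs. 14.8% click rate.
    z, p = two_proportion_z_test(760, 5000, 740, 5000)
    print(f"z = {z:.2f}, p = {p:.3f}")
    print("recipients needed per email:", sample_size_per_arm(0.152, 0.148))
```

With 5,000 recipients per email, the 0.4-point gap gives z ≈ 0.56 and a p-value well above 0.05, so the “win” is indistinguishable from noise. Reliably detecting a gap that small takes roughly 125,000 recipients per email, which is why very large senders can read meaning into decimal points and most others can’t.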

Udemy: A/B Testing in Action

With more than seven million students, Udemy has a massive user base to test with. Samples that large yield statistically meaningful results, which Udemy uses to constantly iterate on its email campaigns.

Jen Rhee, Udemy’s email marketing manager, was kind enough to share the results of two recent A/B tests with us. Jen is a leader in this fast-growing organization, and our conversation confirmed that she is methodical in her approach to growth.

Let’s dive into the examples.

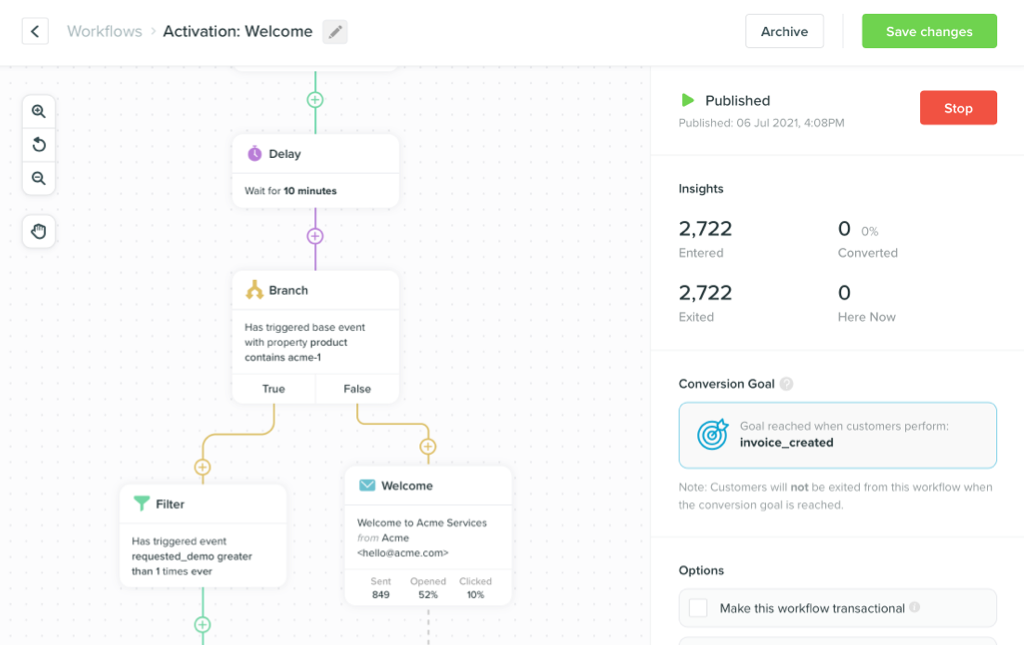

Example #1: The Welcome Email

We’ve often said that your welcome email sets the tone for your relationship with new users, but Jen has data to back that up. She has found that revenue generated in a user’s first week correlates with higher customer lifetime value. That means the welcome email could set the trajectory for a valuable relationship, or prevent that relationship from ever happening.

To improve these emails, Jen pitted Udemy’s existing welcome email (the control) against a new one (the variable). The control email welcomed new users with 50 percent off any course. It’s an enticing deal, but it’s also very open-ended: even though the email included some suggested courses, the header was pretty general.

Jen suspected that suggesting a course right off the bat would drive more early revenue, which would increase lifetime value and set the tone for a great customer experience. Her variable email leveraged behavioral data to serve up a course that the user had already expressed interest in. She included that course in the email header, along with the same 50 percent off coupon.
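The article doesn’t say how Udemy chooses the suggested course. As a hypothetical sketch of the idea, you could score each course by a user’s recent interactions and put the top one in the header, falling back to the generic 50 percent offer when there’s no signal. The event types, weights, header wording, and course names are all assumptions.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical interest weights per interaction type (not Udemy's real scoring).
EVENT_WEIGHTS = {"view": 1, "preview": 3, "wishlist": 5}


@dataclass
class Event:
    user_id: str
    course: str
    kind: str  # expected to be one of the EVENT_WEIGHTS keys


def top_course(events: List[Event], user_id: str) -> Optional[str]:
    """Return the course the user has shown the most interest in, or None."""
    scores: Counter = Counter()
    for event in events:
        if event.user_id == user_id:
            # Unknown interaction types contribute nothing to the score.
            scores[event.course] += EVENT_WEIGHTS.get(event.kind, 0)
    if not scores:
        return None
    course, score = scores.most_common(1)[0]
    return course if score > 0 else None


def welcome_header(course: Optional[str], discount: int = 50) -> str:
    """Personalize the header when a course is known; otherwise use a generic offer."""
    if course is None:
        return f"Welcome! Take {discount}% off any course"
    return f"Welcome! Start {course} today and save {discount}%"


if __name__ == "__main__":
    # Hypothetical behavioral events for one user.
    events = [
        Event("u1", "Photoshop CC Essentials", "view"),
        Event("u1", "iOS App Development", "wishlist"),
        Event("u1", "Photoshop CC Essentials", "view"),
    ]
    print(welcome_header(top_course(events, "u1")))
    print(welcome_header(top_course(events, "u2")))
```

Keeping the coupon identical in both versions, as Jen did, means the header’s personalization is the only thing that differs between control and variable.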

Before I tell you the results, consider that you probably already know which email performed better. Jen felt the same way, but ran the test anyway. Assumptions are just that: untested suppositions that ought to be verified.

As expected, the second email was a smashing success. It generated 150 percent more revenue in the first week, and 94 percent more revenue in the first 30 days.
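Lift figures like these are relative to the control. A quick sketch of the arithmetic (our illustration, not Udemy’s reporting): “150 percent more” revenue means the variable earned 2.5 times what the control did, not 1.5 times. The revenue figures in the code are hypothetical.

```python
def relative_lift(control: float, variant: float) -> float:
    """Relative lift of the variant over the control (1.5 means '150 percent more')."""
    if control <= 0:
        raise ValueError("control revenue must be positive")
    return (variant - control) / control


def variant_multiple(lift: float) -> float:
    """Convert a relative lift into a multiple of the control's result."""
    return 1 + lift


if __name__ == "__main__":
    # Hypothetical first-week revenue, arbitrary units.
    print(relative_lift(100.0, 250.0))  # 1.5, i.e. 150 percent more
    print(variant_multiple(1.5))        # 2.5 times the control
```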

Takeaway: Behavioral data adds context to emails. Each time this email is triggered, the content is unique. This is personalization at its finest. Suggesting one course, as opposed to just offering a coupon, removes friction from the buying process, making it easier for new users to get started.

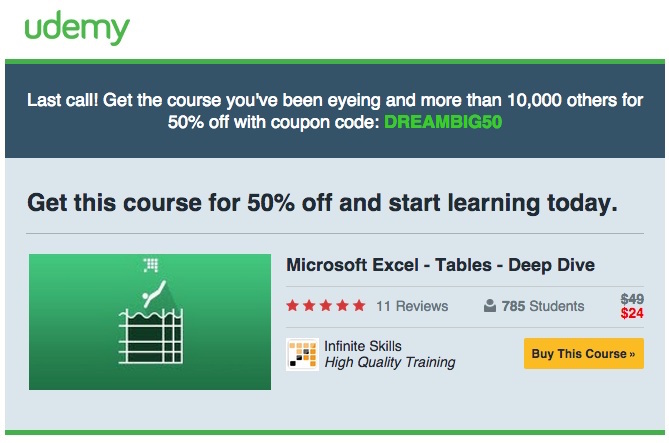

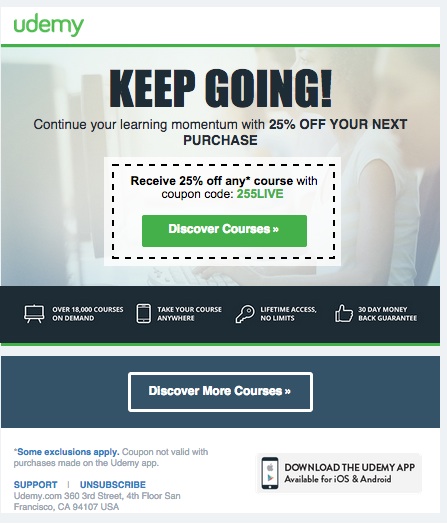

Example #2: The Lifecycle Email

In marketing, you’re often either fighting inertia or riding momentum.

Email is one of the primary ways to build on momentum, which can lead to happier, more engaged customers. With this in mind, Jen began testing Udemy’s “post-enroll” email campaign, which was triggered after users purchased their first course.

“We got some insight from our users that they purchase a course with goals in mind,” Jen told us. “If they buy a Photoshop course, they want to create beautiful designs. If they take an app development course, they want to build apps.”

To improve Udemy’s lifecycle emails, Jen ran a test similar to the one above. The existing campaign offered a coupon code with a generic call to action to “Discover Courses.”

She tested this against a benefits-oriented header that said, in the case of users who bought app development courses, “Make An App That Everyone Will Want” along with a coupon code.
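One way to express this variation is a lookup from a course’s category to a goal-oriented header, falling back to the generic “Discover Courses” call to action. This is a hypothetical sketch: the category keys and the design header are placeholders, and only the app-development header and the “Discover Courses” fallback come from Udemy’s test.

```python
from typing import Optional

# Hypothetical category -> benefit-oriented header mapping.
# Only the app-development line comes from Udemy's test; "design" is a placeholder.
GOAL_HEADERS = {
    "app-development": "Make An App That Everyone Will Want",
    "design": "Create Designs That Stand Out",
}
GENERIC_CTA = "Discover Courses"


def post_enroll_header(category: Optional[str]) -> str:
    """Pick a goal-oriented header for the post-enroll email, else the generic CTA."""
    if category is None:
        return GENERIC_CTA
    return GOAL_HEADERS.get(category, GENERIC_CTA)


if __name__ == "__main__":
    print(post_enroll_header("app-development"))
    print(post_enroll_header("cooking"))  # no mapping, so the generic CTA
```

The coupon code stays the same in both versions; only the header changes from a feature (a discount) to a benefit (the thing the student wants to build).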

As you might expect, the second email was a runaway hit. It generated 60 percent more short-term revenue, and 132 percent more long-term revenue.

Takeaway: As Jen told us, “Customizing the creative based on the student’s goals performed better than focusing on coupons alone.” It’s a classic case of benefits vs. features that Udemy has been able to leverage for a higher customer lifetime value.

The Secret Sauce

The key to Jen’s success at Udemy hasn’t been coming up with creative test ideas, or even designing and implementing the tests. It’s that she actually reports on her findings and uses that knowledge to improve the emails. As the campaigns run and collect more data, she tests another idea and iterates again.

A/B testing is a cycle, not a campaign. The next time you arbitrarily A/B test your newsletter’s subject line, don’t expect tangible results. The real insights stem from a culture of testing and a commitment to data.
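The cycle can be sketched as a champion/challenger loop: whichever email wins becomes the control for the next test. This is our illustration, not Udemy’s tooling; the `Arm` type, the one-sided z-test, the 5% threshold, and the click counts are all assumptions.

```python
import math
from dataclasses import dataclass
from statistics import NormalDist


@dataclass
class Arm:
    name: str
    clicks: int
    sends: int


def beats(challenger: Arm, control: Arm, alpha: float = 0.05) -> bool:
    """One-sided two-proportion z-test: is the challenger's click rate significantly higher?"""
    pooled = (challenger.clicks + control.clicks) / (challenger.sends + control.sends)
    se = math.sqrt(pooled * (1 - pooled) * (1 / challenger.sends + 1 / control.sends))
    if se == 0:
        return False  # no variation at all (0% or 100% clicks): nothing to conclude
    z = (challenger.clicks / challenger.sends - control.clicks / control.sends) / se
    return 1 - NormalDist().cdf(z) < alpha


def next_control(control: Arm, challenger: Arm) -> Arm:
    """Promote the challenger only if it significantly beats the current control."""
    return challenger if beats(challenger, control) else control


if __name__ == "__main__":
    # Hypothetical round 1: generic header vs. behavioral header.
    winner = next_control(Arm("generic header", 500, 5000), Arm("behavioral header", 650, 5000))
    # Hypothetical round 2: fresh sends of the new control vs. another idea.
    winner = next_control(Arm(winner.name, 640, 5000), Arm("new hero image", 660, 5000))
    print(winner.name)  # behavioral header
```

Each round compares fresh sends of the current control against the new idea. Running many tests at a fixed 5% threshold means some “wins” will be false positives, so a surprising winner is worth retesting before it becomes the new control.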

Do you have questions for Jen or about A/B testing in general? Leave a note in the comments.